Worried about the performance and reliability of your data-intensive application?

Capgemini research shows that only 13% of organizations have achieved full-scale production for their data-intensive applications (DIAs). In particular, the research refers to applications built on Big Data technologies such as Hadoop MapReduce, Apache Storm or Apache Spark. Apart from correctly deploying and optimizing a DIA, software engineers face the problem of meeting performance and reliability requirements. A framework that helps guarantee these requirements in the very early phases of development would be of great help, because in later phases the ecosystem of a cluster is not completely controllable. Predictions of throughput, service time and scalability under varying numbers of users, workloads, network traffic or failures are therefore needed. Within the DICE project, the Simulation tool has been developed to help achieve that.

If you are looking for a quality-driven framework for DIA development, the Simulation tool [1] of the DICE project can be your choice. This tool makes it easier to simulate the behavior of the system prior to deployment. Hence, you get a real-world testbed for assessing the performance of the DIA. The Simulation tool features:

- Prediction of performance metrics: throughput, utilization or service time;

- Detection of performance bottlenecks;

- Detection of reliability issues.
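To make these metrics concrete, here is a minimal sketch of how throughput, utilization and service time relate, using the standard utilization law (utilization = throughput × service time / number of servers). It is not the Simulation tool's own computation; the stage names, service times and worker counts are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One processing stage of a hypothetical streaming application."""
    name: str
    service_time_s: float  # assumed mean time to process one tuple on one worker
    workers: int           # assumed number of parallel workers


def utilization(stage: Stage, arrival_rate: float) -> float:
    """Utilization law: U = X * S / m for throughput X, service time S, m workers."""
    return arrival_rate * stage.service_time_s / stage.workers


def bottleneck(stages: list[Stage], arrival_rate: float) -> Stage:
    """The bottleneck is the stage with the highest utilization."""
    return max(stages, key=lambda s: utilization(s, arrival_rate))


def max_throughput(stages: list[Stage]) -> float:
    """The pipeline saturates when its slowest stage reaches 100% utilization."""
    return min(s.workers / s.service_time_s for s in stages)


# Hypothetical three-stage topology; all numbers are illustrative assumptions.
stages = [
    Stage("spout", service_time_s=0.002, workers=1),
    Stage("parse_bolt", service_time_s=0.010, workers=2),
    Stage("count_bolt", service_time_s=0.004, workers=1),
]

if __name__ == "__main__":
    rate = 100.0  # assumed input rate in tuples per second
    for s in stages:
        print(f"{s.name}: utilization {utilization(s, rate):.2f}")
    print("bottleneck:", bottleneck(stages, rate).name)
    print("saturation throughput (tuples/s):", max_throughput(stages))
```

In this toy setting the stage with the highest utilization is the first to saturate as load grows, which is the kind of bottleneck a performance prediction is meant to reveal before deployment.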

Once software developers have the simulation results, they can configure, adapt, or optimize their DIA for the specific execution context. The Simulation tool offers a modeling environment integrated within the Papyrus Eclipse tool, which guides the software developer through the design and analysis phases. The Simulation tool covers all the steps of a simulation workflow, as follows:

- modeling with high-level description languages, in particular UML, using a novel profile for describing the parameters and characteristics of the system;

- transformation to performance models, specifically Stochastic Petri Nets, that are suitable for prediction;

- analysis of the model and retrieval of the performance results.

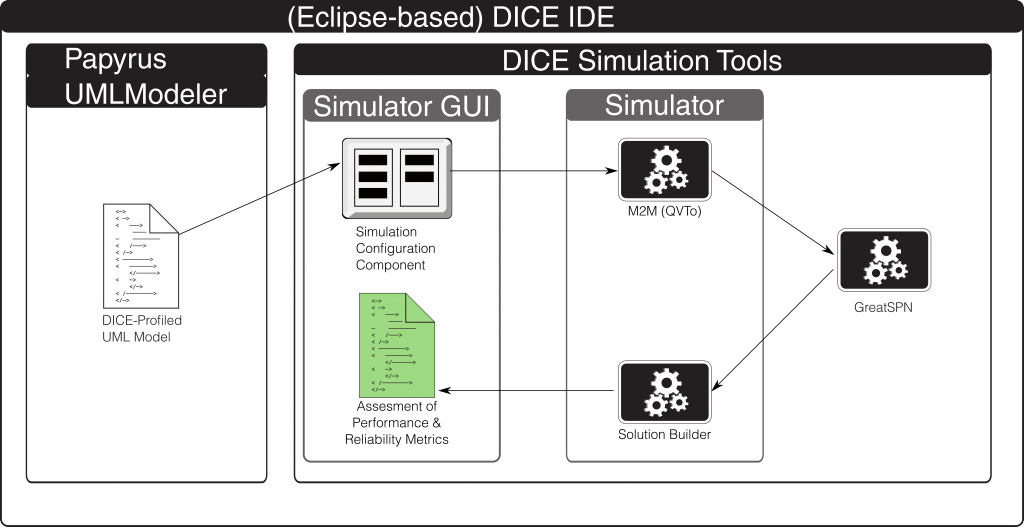

The image above offers an overview of the simulation workflow with its internal tools, modules, and configurations. The transformation from UML to a Stochastic Petri Net is a model-to-model (M2M) transformation written in the QVTo language. The Stochastic Petri Net is then analyzed by the GreatSPN tool, which produces the performance results.
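To give an intuition for what analyzing a Stochastic Petri Net involves, the sketch below simulates a tiny net with exponentially timed transitions and estimates the throughput of one of them. It is a hand-written illustration, not GreatSPN's algorithm or API: the net, the place and transition names, and the rates are all hypothetical, and the simulation uses a simple race between enabled transitions with single-server semantics.

```python
import random


def simulate_spn(marking, transitions, watch, horizon, seed=0):
    """Estimate the throughput of transition `watch` over `horizon` time units.

    marking:     dict mapping place name -> initial token count
    transitions: list of dicts with keys "name", "inputs", "outputs", "rate"
                 (each arc has weight 1; firing delays are exponential)
    """
    rng = random.Random(seed)
    marking = dict(marking)  # work on a copy so the caller's marking is untouched
    clock = 0.0
    fired = 0
    while True:
        # A transition is enabled when every input place holds at least one token.
        enabled = [t for t in transitions
                   if all(marking[p] >= 1 for p in t["inputs"])]
        if not enabled:
            break  # deadlock: nothing can fire any more
        # Race of exponentials: the time to the next firing is exponential with
        # the summed rate, and the winner is chosen proportionally to its rate.
        total_rate = sum(t["rate"] for t in enabled)
        clock += rng.expovariate(total_rate)
        if clock > horizon:
            break
        chosen = rng.choices(enabled, weights=[t["rate"] for t in enabled])[0]
        for p in chosen["inputs"]:
            marking[p] -= 1
        for p in chosen["outputs"]:
            marking[p] += 1
        if chosen["name"] == watch:
            fired += 1
    return fired / horizon


# Hypothetical closed net: one token cycles between "idle" and "queued".
initial_marking = {"idle": 1, "queued": 0}
transitions = [
    {"name": "arrive", "inputs": ["idle"], "outputs": ["queued"], "rate": 2.0},
    {"name": "process", "inputs": ["queued"], "outputs": ["idle"], "rate": 3.0},
]

if __name__ == "__main__":
    estimate = simulate_spn(initial_marking, transitions,
                            watch="process", horizon=20000.0, seed=1)
    print(f"estimated throughput of 'process': {estimate:.3f} firings per time unit")
```

With a single token, each cycle is the sum of two exponential delays with means 1/2 and 1/3, so the long-run throughput is 1 / (1/2 + 1/3) = 1.2 firings per time unit, and the simulated estimate should land close to that value. Real models produced from UML designs are far larger, which is why a dedicated solver is used.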

The Simulation tool has been integrated within the DICE IDE, but it can also be used as a stand-alone application. Currently, the Simulation tool supports platform-independent models as well as the Storm technology. We plan to extend the technology support to Apache Spark, Tez and Hadoop in upcoming releases. For more details about the Simulation tool, please visit our GitHub page.

José Merseguer, José I. Requeno and Diego Pérez (ZAR)

References

- [1] A. Gómez, C. Joubert and J. Merseguer. A Tool for Assessing Performance Requirements of Data-Intensive Applications. XXIV Jornadas de Concurrencia y Sistemas Distribuidos (JCSD 2016).
