The advent of cloud computing has triggered a major change in software release cycles for the growing number of companies that embrace cloud technologies as the 21st century’s technological utility. Where companies once made large, upfront investments in physical servers, that strategy is increasingly being replaced by on-demand, pay-per-use cloud access. At the same time, complex manual deployment procedures are increasingly being automated through DevOps and related technologies. What are the organizational and technical consequences of these phenomena?

Simple: a massive need for monitoring!

Monitoring is becoming a must-have for organizations that want to keep track of their operational software as closely as they would any other product. The need is even more critical for Data Intensive Applications, whose behavior and performance have to be kept under continuous control. Monitoring is therefore the practice that lets organizations elicit the intelligence they need for competitive software strategies and long-term innovation agendas. One question follows: what is the status of monitoring practices for modern IT clouds?

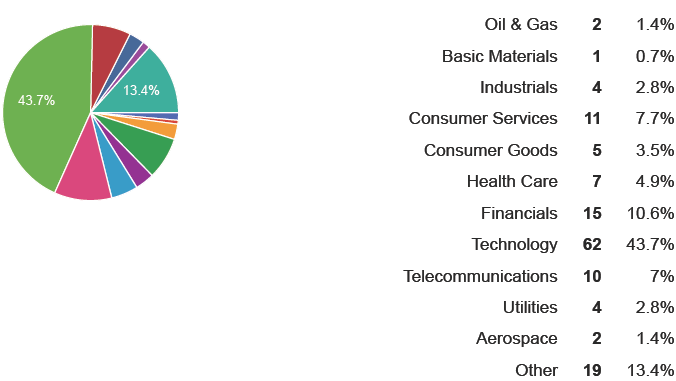

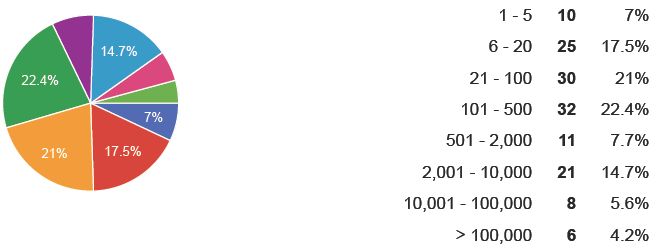

As part of our work within the DICE H2020 Project, and as a continuation of our work on the EU FP7 MODAClouds Project, we surveyed what practitioners in the cloud trenches are experiencing. We conducted the survey in 2016, advertising it through various channels for developers and operators, including Reddit, blogs, and other sites (e.g., Y Combinator, Stack Overflow). We received a statistically relevant set of 144 answers from practitioners working for as many companies. These range from SMEs using the cloud, to large cloud service vendors, up to several of the so-called “Fortune 500” companies, i.e., the big players in the market that dictate the standards when it comes to clouds. The sample reflects a wide variety of practitioners, organized as shown in Figures 1 and 2.

Fig. 1: Operational market sectors across our sample.

Fig. 2: Size of companies across our sample.

What our respondents see is a radical shift in how software organizations are structured, and even in how software is architected, towards the use of cloud resources accompanied by largely ad-hoc monitoring infrastructures. They told us that, on the one hand, small companies usually prefer cloud hosting solutions and tend to experiment with more recent technologies such as Docker containers rather than investing in monitoring. On the other hand, medium to large companies create specific operator roles entrusted with receiving alerts from their own, almost always “hand-made”, monitoring system.

Operators are usually self-taught and skilled enough to set up a monitoring platform using one or more open-source monitoring tools and design patterns (think of the widely known ELK stack). These platforms cover both system and application metrics, which are usually accessed by operators only. In summary, these companies invest a lot in monitoring, especially in the financial sectors of the market. Some even use more advanced machine-learning and Big Data analytics techniques to analyze monitored data for a variety of purposes, mainly service improvement and innovation.

Not surprisingly, our survey shows that big players are aware of the shortcomings of their monitoring infrastructure and are seriously considering how to improve it. The investments involved vary with company size, ranging from 10-20% of operational costs (for most SMEs) to 80% (for top IT cloud players).

At the same time, however, big industrial players remain largely unaware of the observability of their cloud software architectures, that is, the ability to monitor and keep track of software via automated means. Similarly, even the biggest players remain completely unaware of how the complexity of their applications is outgrowing the monitoring platform that is supposed to “watch” those applications and keep their products alive. While we expected observability to evolve jointly with architecture complexity, we found an erratic relation, if any, between these two key dimensions across our entire dataset.

On a final note, the number of companies that are either unaware of their monitoring shortcomings or not planning to address them is remarkably high. For example, our data indicates that 1 out of 3 companies pays for storing, aggregating and manipulating historical cloud application monitoring data but *does not* use that data for forward engineering and software evolution purposes.

Based on these preliminary findings, we argue that companies should prepare carefully for the upcoming monitoring boom. At the same time, researchers should be prepared to address the technical and organizational challenges around these issues. While the technology needed for monitoring exists today, our evidence shows that the approaches, processes and skills needed to apply it in concrete and diverse cases are still to be distilled from a large variety of mostly industrial research endeavours in the area.

As a further continuation of our work, we plan to prepare a full report of our findings and to address the issues above, in the hope that more standardised and affordable monitoring solutions will emerge soon.

Damian A. Tamburri, Politecnico di Milano

Elisabetta Di Nitto, Politecnico di Milano

Marco Miglierina, Politecnico di Milano
